My Decade-Long Quest for Audio Reactive Visuals

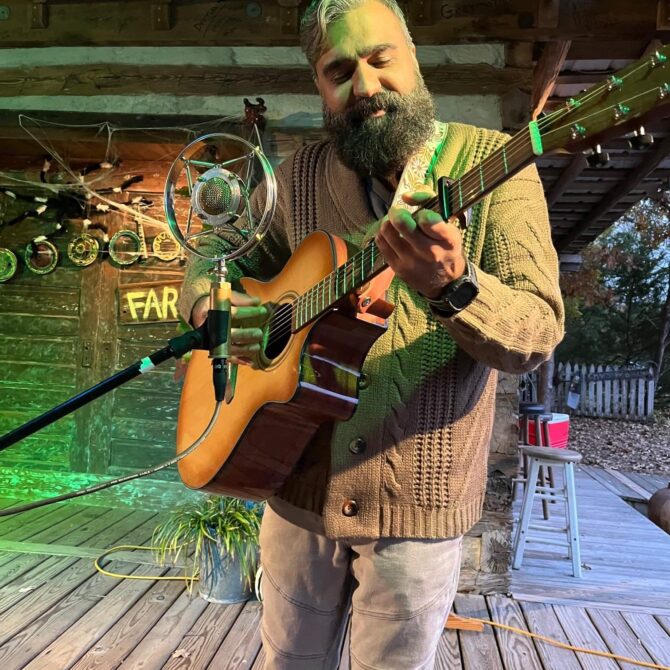

Some ideas just won’t leave you alone. They haunt the back of your mind, popping up during late-night drives or in the middle of a session. For me, that idea has always been about making the music seen. Not just a pre-made video playing behind the band, but a living, breathing visual that responds to every note, every hit, every ounce of expression coming from the stage.

This particular ghost has been rattling its chains since at least 2016.

The first time I thought I had it cornered was back in 2017. I was working with the phenomenal looping cellist, Brianna Tam, on her Bioluminescence show. The concept was to have live, audio-reactive animations projected on stage while she played. We hooked up my laptop running a program called Synesthesia, fed it a mic, and pointed a projector at the wall. And you know what? It was cool. For a minute. But there was latency: a tiny but infuriating lag that made it feel like the visuals were chasing the music instead of dancing with it. The connection was missing.

So, the project went back on the shelf. A cool experiment, but not the breakthrough I was looking for.

Fast forward through… well, a lot. I’m finally ready to wrestle with this ghost again. I’ve been working weekly with my friend and resident tech wizard, Jim Willford, to map out a real battle plan. And what I’ve learned is that the landscape has gotten a whole lot bigger, and a whole lot more complicated.

Down the Rabbit Hole of VJ Software

Jim’s first move was to introduce MIDI into the equation, and with it, a whole universe of software that sent my head spinning.

- Synesthesia: This was my starting point. It’s brilliant for what it is—an out-of-the-box visual generator. You feed it audio or MIDI, and it spits out complex, trippy scenes. It’s fast and user-friendly, but I found its animations a bit limiting for what I wanted to build from scratch.

- Resolume Arena: This is the professional standard. Where Synesthesia is a pre-built synth, Resolume is a modular rig. It’s a blank canvas. You bring your own video clips, your own generative sources, and you build the entire visual response from the ground up. It’s powerful, intimidating, and gives you total freedom. It’s also… not cheap.

- The “Build-Your-Own-Universe” Tier: Then you have things like TouchDesigner, a node-based environment where you can literally build your own VJ software from scratch. And now, in late 2025, we have AI-first platforms like Runway and Kaiber that are starting to offer real-time, MIDI-influenced video generation. We’re talking about a future where my guitar could tell an AI to generate a rainy, neon-soaked city street in real time.

See? Rabbit hole.

The Hardware Reality Check

My trusty Alienware 17 R4 laptop has been a workhorse, but we quickly identified its bottleneck: the internal GTX 1060 Mobile GPU. It’s fine for recording and mixing, but for driving multiple projectors with complex generative AI on top? It would melt.

The solution Jim found was beautifully nerdy: the Alienware Graphics Amplifier. It’s an external box that lets you plug a full-size, desktop graphics card into the laptop. Suddenly, a used RTX 3070 or a new 4060 could give my old machine the horsepower of a modern gaming rig, completely solving the performance problem.

The Breakthrough Wasn’t the Gear

We talked about graphics cards, projection mapping onto silos, and my pie-in-the-sky idea for an “Agentic AI” that could read pre-loaded lyrics and generate entire 3D scenes based on the mood of the song (we filed that under “Version 2.0, find a team of geniuses”).

But the real breakthrough, the thing that finally made it all click, was a simple concept.

I was thinking about it all wrong. My initial idea was basic: I play a C note, a picture of a cat appears. Cool, but kind of stupid. It’s a light switch. On, off.

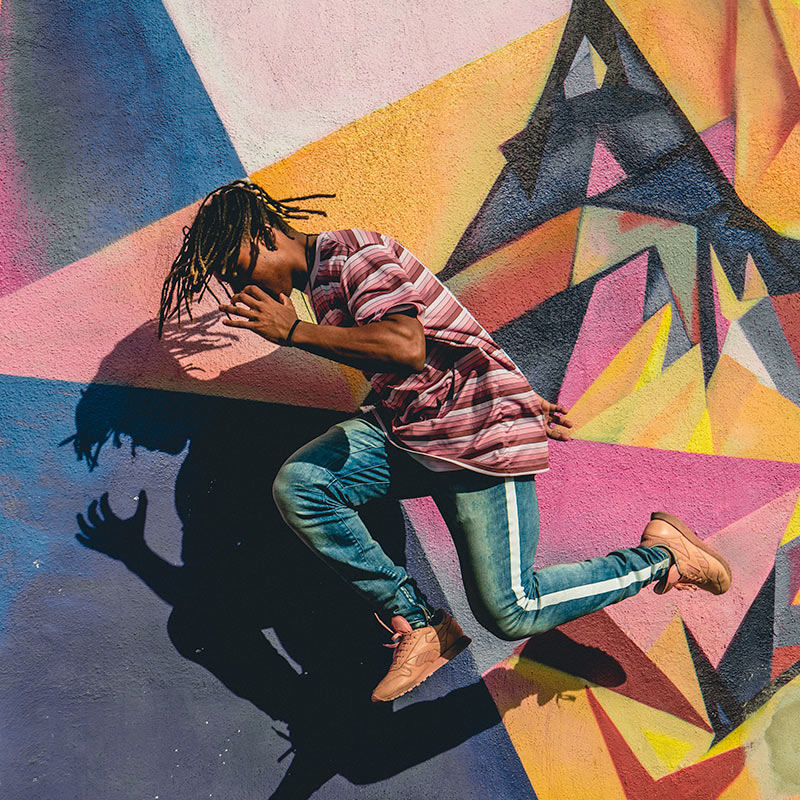

Jim helped me reframe it. The key isn’t that you play a note; it’s how you play it. It’s MIDI velocity: a number from 0 to 127 that captures how hard you hit the key, how aggressively you strike the string. Instead of just triggering a clip, you map that velocity data to a parameter, like the brightness of a light, the speed of an animation, or the size of a geometric shape.

Suddenly, it’s not a light switch; it’s a dimmer. It’s expressive. It’s connected.
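
To make the dimmer idea concrete, here is a minimal Python sketch of the difference. It is not how Synesthesia or Resolume do it internally (in those apps you set this up in the MIDI mapping screens); the function names `velocity_to_param` and `light_switch` and the `curve` parameter are made up for illustration.

```python
def velocity_to_param(velocity, out_min=0.0, out_max=1.0, curve=1.0):
    """Map a MIDI velocity (0-127) onto a visual parameter range.

    Hypothetical helper: 'curve' is an assumed shaping exponent.
    curve > 1 makes soft playing subtler, curve < 1 makes it more sensitive.
    """
    # Clamp to the valid 7-bit MIDI range.
    velocity = max(0, min(127, velocity))
    normalized = velocity / 127.0
    shaped = normalized ** curve
    return out_min + shaped * (out_max - out_min)


def light_switch(velocity):
    """The on/off approach: any played note gives full brightness."""
    return 1.0 if velocity > 0 else 0.0


# Compare the two approaches for a soft, medium, and hard hit.
for v in (20, 64, 127):
    print(v, light_switch(v), round(velocity_to_param(v), 2))
```

The switch returns the same value no matter how the note was played, while the mapped version scales with the hit. The same function could drive animation speed or shape size just by changing `out_min` and `out_max`.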

The New, Sane Plan

After exploring the bleeding edge, we landed on a practical, powerful starting point I can build right now, with the gear I already own.

- The Goal: An expressive, single-projector show.

- The Hardware: My Alienware 17 R4, no graphics amplifier needed (for now). It’s more than capable of this.

- The Software: Stick with Synesthesia or Resolume for now.

- The Technique: Master expressive MIDI mapping. Go deep on velocity and CC (Control Change) messages. Make the visuals feel like an extension of the instrument.
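
As a rough picture of what "velocity and CC messages" look like under the hood, here is a small Python sketch that reads raw three-byte MIDI messages and updates two visual parameters. The status bytes (0x90 for note-on, 0xB0 for Control Change) and CC 1 as the mod wheel come from the MIDI standard; the `VisualState` class, the `apply_midi` function, and the choice to tie CC 1 to animation speed are assumptions for illustration only.

```python
from dataclasses import dataclass

NOTE_ON = 0x90         # status byte for note-on (channel lives in the low 4 bits)
CONTROL_CHANGE = 0xB0  # status byte for Control Change (CC)
SPEED_CC = 1           # assumption: CC 1 (mod wheel) drives animation speed


@dataclass
class VisualState:
    """Hypothetical set of visual parameters, each in the range 0.0 to 1.0."""
    brightness: float = 0.0  # follows note velocity
    speed: float = 0.0       # follows the chosen CC


def apply_midi(state, message):
    """Update the visual state from one (status, data1, data2) MIDI message."""
    status, data1, data2 = message
    kind = status & 0xF0  # strip the channel number
    if kind == NOTE_ON and data2 > 0:
        # data2 is the velocity; a note-on with velocity 0 means note-off.
        state.brightness = data2 / 127.0
    elif kind == CONTROL_CHANGE and data1 == SPEED_CC:
        # data1 is the controller number, data2 is its value.
        state.speed = data2 / 127.0
    return state


state = VisualState()
apply_midi(state, (0x90, 60, 100))  # middle C, played fairly hard
apply_midi(state, (0xB0, 1, 32))    # mod wheel nudged up a quarter
print(state)
```

Velocity only arrives once per note, while a CC can keep changing as a knob, pedal, or wheel moves, which is why the two together cover both the attack and the ongoing shape of a performance.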

And just because I can’t leave well enough alone, I spent some time today tinkering with a program called NestDrop. It’s promising, and the visuals are wild, though I’m still wrestling with the audio settings. The experimentation never really stops.

The ghost isn’t gone. But for the first time in almost a decade, I feel like I’m the one doing the haunting. We’ve got a map, we’ve got a mission, and we’re finally turning the obsession into something real.

What about you? Are any of you messing around with live visuals for your music? What tools are you using? Drop a comment below—I’d love to hear what rabbit holes you’ve fallen down.
